Setting up a Kubernetes cluster manually can be repetitive and error-prone. This guide walks you through automating the installation of K3s, configuring the cluster, and deploying tools like Helm and the NVIDIA GPU Operator, all using Bash scripts executed through CloudRay.

Whether you’re building a lightweight production cluster or a test environment, automation makes the process faster, more consistent, and easier to manage.

Contents

- Adding Servers to CloudRay

- Assumptions

- Create the Automation Script

- Create a Variable Group

- Running the Script with CloudRay

- Troubleshooting

- Related Guide

Adding Servers to CloudRay

Before getting started with your automation, make sure your target servers are connected to CloudRay. If you haven’t done this yet, follow our servers docs to add and manage your servers.

NOTE

This guide uses Bash scripts, providing a high degree of customisation. You can adapt the scripts to fit your specific installation needs and environment.

Assumptions

- This guide assumes you’re using Ubuntu 24.04 LTS as your server’s operating system. If you’re using a different version or a different distribution, adjust the commands accordingly.

- You intend to deploy K3s as a single-node cluster. A multi-node setup requires additional steps, such as joining nodes, that this guide does not cover.

- If you plan to run GPU workloads, your server already has the NVIDIA drivers installed.
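The assumptions above can be checked before anything is installed. The following is a minimal preflight sketch, not part of the original scripts: the function names `os_matches` and `has_nvidia_driver` are hypothetical, and it assumes the standard `/etc/os-release` file and that `nvidia-smi` is on the PATH whenever the NVIDIA driver is installed.

```bash
#!/bin/bash
# Preflight sketch (hypothetical helper names, not part of CloudRay or K3s).

# os_matches FILE ID VERSION
# Succeeds when the os-release style FILE reports the given ID and VERSION_ID.
os_matches() {
  local file="$1" want_id="$2" want_version="$3"
  [ -r "$file" ] || return 1
  (
    # Source the file in a subshell so its variables do not leak to the caller.
    . "$file"
    [ "${ID:-}" = "$want_id" ] && [ "${VERSION_ID:-}" = "$want_version" ]
  )
}

# has_nvidia_driver
# Succeeds when nvidia-smi is on the PATH and can talk to the driver.
has_nvidia_driver() {
  command -v nvidia-smi >/dev/null 2>&1 && nvidia-smi >/dev/null 2>&1
}

# Example use at the top of an install script:
#   os_matches /etc/os-release ubuntu 24.04 || echo "Warning: not Ubuntu 24.04" >&2
#   has_nvidia_driver || echo "Warning: NVIDIA driver not detected" >&2
```

Both helpers only return a status, so the calling script decides whether a mismatch is a warning or a reason to stop.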

Create the Automation Script

To automate the installation, you’ll need two Bash scripts:

- Install and Configure Cluster Script: This script installs K3s and configures the environment

- Install Helm and Deploy NVIDIA Script: This script installs Helm and deploys the NVIDIA GPU Operator

Let’s begin with the installation and configuration of the cluster.

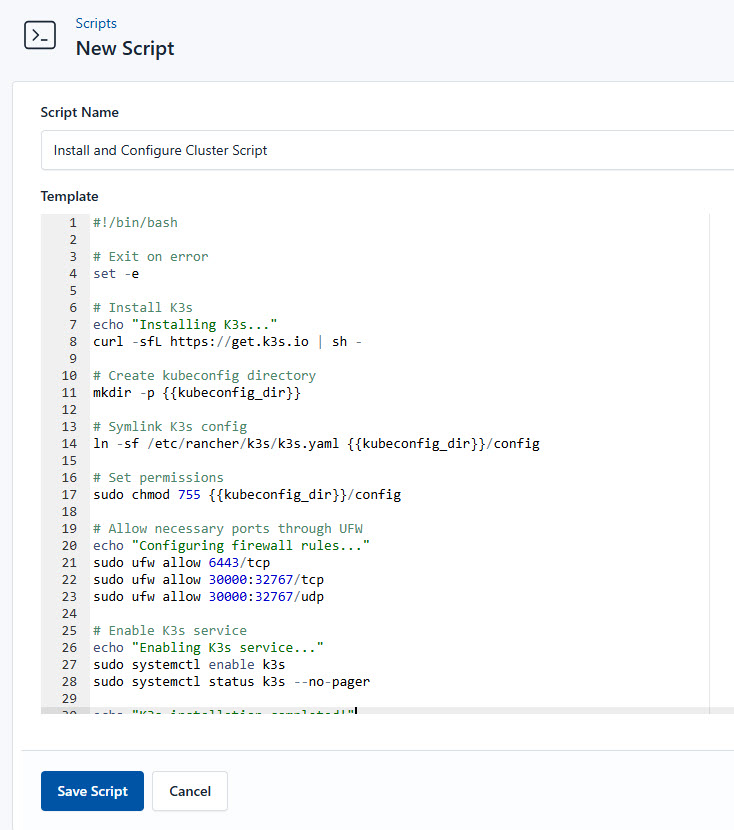

Install and Configure Cluster Script

To create the Install and Configure Cluster Script, you need to follow these steps:

- Go to Scripts in your CloudRay project

- Click New Script

- Name: Install and Configure Cluster Script. You can give it any name of your choice

- Copy this code:

#!/bin/bash

# Exit on error

set -e

# Install K3s

echo "Installing K3s..."

curl -sfL https://get.k3s.io | sh -

# Create kubeconfig directory

mkdir -p {{kubeconfig_dir}}

# Symlink K3s config

ln -sf /etc/rancher/k3s/k3s.yaml {{kubeconfig_dir}}/config

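# Set permissions (chmod follows the symlink, so this changes /etc/rancher/k3s/k3s.yaml;

# 644 keeps it readable without the unnecessary execute bit)

sudo chmod 644 {{kubeconfig_dir}}/config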

# Allow necessary ports through UFW

echo "Configuring firewall rules..."

sudo ufw allow 6443/tcp

sudo ufw allow 30000:32767/tcp

sudo ufw allow 30000:32767/udp

# Enable K3s service

echo "Enabling K3s service..."

sudo systemctl enable k3s

sudo systemctl status k3s --no-pager

echo "K3s installation completed!"

Here is a breakdown of what the Install and Configure Cluster Script does:

- Installs K3s using the official installation script

- Creates and configures the Kubernetes configuration directory

- Opens required ports in the firewall

- Enables the K3s service so it starts on boot, then prints its status
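After the steps above complete, it can be useful to confirm that the node has actually registered before moving on. The sketch below polls `kubectl get nodes` until a node reports `Ready`. The function name `wait_for_ready_node` and the default retry values are assumptions for illustration, and it assumes `kubectl` can reach the cluster (for example, run as root on the K3s server, or with `KUBECONFIG` set).

```bash
#!/bin/bash
# wait_for_ready_node [ATTEMPTS] [DELAY_SECONDS]
# Polls the cluster until at least one node has STATUS "Ready".
# Hypothetical helper; the defaults (30 tries, 5 s apart) are arbitrary.
wait_for_ready_node() {
  local attempts="${1:-30}" delay="${2:-5}" i
  for ((i = 1; i <= attempts; i++)); do
    # Column 2 of `kubectl get nodes --no-headers` is the node STATUS.
    if kubectl get nodes --no-headers 2>/dev/null |
      awk '$2 == "Ready" { found = 1 } END { exit !found }'; then
      echo "Node is Ready"
      return 0
    fi
    sleep "$delay"
  done
  echo "Timed out waiting for a Ready node" >&2
  return 1
}

# Example use at the end of the cluster script:
#   wait_for_ready_node 30 5
```

Because the function returns a non-zero status on timeout, a script running with `set -e` stops at this point instead of reporting success on a cluster that never came up.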

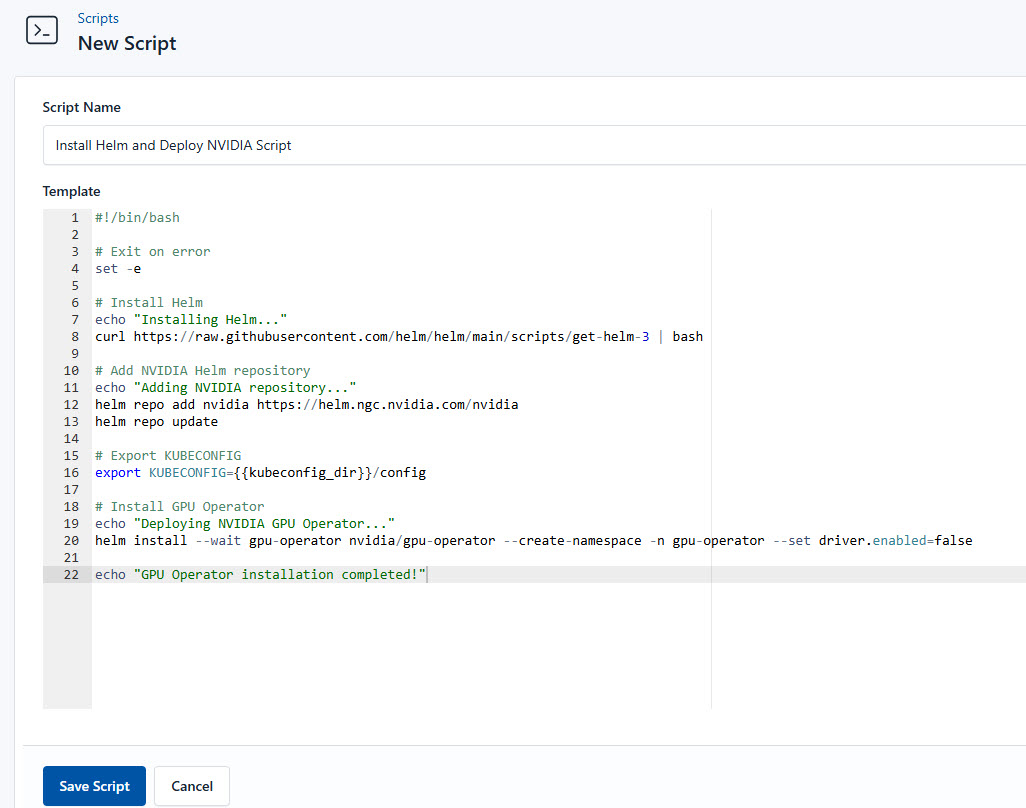

Install Helm and Deploy NVIDIA Script

If you plan to run GPU-accelerated workloads, you can install Helm and deploy the NVIDIA GPU Operator.

Follow these steps to create the script:

- Go to Scripts > New Script

- Name: Install Helm and Deploy NVIDIA Script

- Add code:

#!/bin/bash

# Exit on error

set -e

# Install Helm

echo "Installing Helm..."

curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

# Add NVIDIA Helm repository

echo "Adding NVIDIA repository..."

helm repo add nvidia https://helm.ngc.nvidia.com/nvidia

helm repo update

# Export KUBECONFIG

export KUBECONFIG={{kubeconfig_dir}}/config

# Install GPU Operator

echo "Deploying NVIDIA GPU Operator..."

helm install --wait gpu-operator nvidia/gpu-operator --create-namespace -n gpu-operator --set driver.enabled=false

echo "GPU Operator installation completed!"

This is what the Install Helm and Deploy NVIDIA Script does:

- Installs Helm, a package manager for Kubernetes

- Adds the NVIDIA Helm repository and updates it

- Deploys the NVIDIA GPU Operator to enable GPU workloads in K3s
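One thing to be aware of: `helm install` fails when a release with the same name already exists, so running the script a second time on the same server stops at the deploy step. A possible variant, sketched here as an assumption rather than the guide's method, uses `helm upgrade --install`, which installs the release if it is missing and upgrades it otherwise. The function name `deploy_gpu_operator` is hypothetical.

```bash
#!/bin/bash
# deploy_gpu_operator KUBECONFIG_PATH
# Installs the GPU Operator, or upgrades it if the release already exists.
# Hypothetical wrapper; the chart name and values match the script above.
deploy_gpu_operator() {
  local kubeconfig="$1"
  KUBECONFIG="$kubeconfig" helm upgrade --install gpu-operator nvidia/gpu-operator \
    --namespace gpu-operator \
    --create-namespace \
    --set driver.enabled=false \
    --wait
}

# Example use inside the CloudRay script, after `helm repo update`:
#   deploy_gpu_operator "{{kubeconfig_dir}}/config"
```

Once the command returns, `kubectl get pods -n gpu-operator` lists the operator's pods so you can confirm they are running.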

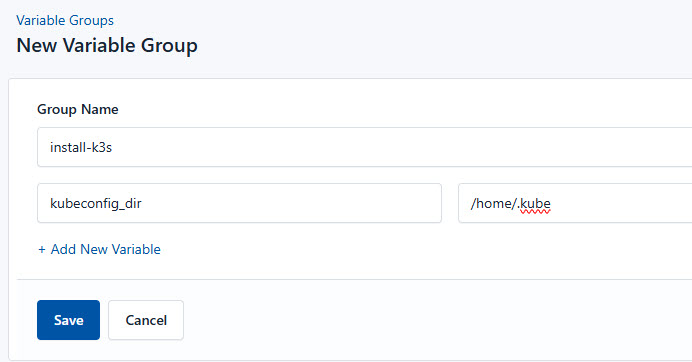

Create a Variable Group

Before running the scripts, you need to define a value for the placeholder {{kubeconfig_dir}} used in the scripts. CloudRay processes all scripts as Liquid templates. This allows you to use variables dynamically across different servers.

To ensure that these values are automatically substituted when the script runs, follow these steps to create a variable group:

- Navigate to Variable Groups: In your CloudRay project, go to “Scripts” in the top menu and click on “Variable Groups”.

- Create a new Variable Group: Click on “Variable Group”.

- Add the following variables:

kubeconfig_dir: This is the path where Kubernetes configuration files are stored.
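To preview what a script looks like after the placeholder is filled in, you can mimic the substitution locally. The sketch below is only a stand-in built on `sed`, not CloudRay's Liquid engine, and the value `/root/.kube` is an assumed example rather than a required setting. The function name `render_template` is hypothetical.

```bash
#!/bin/bash
# render_template VALUE
# Reads a script template on stdin and replaces every {{kubeconfig_dir}}
# placeholder with VALUE. A local stand-in for Liquid rendering; VALUE must
# not contain the characters | or &, which are special to this sed command.
render_template() {
  local value="$1"
  sed "s|{{kubeconfig_dir}}|${value}|g"
}

# Example: preview one line of the cluster script with an assumed value.
echo 'ln -sf /etc/rancher/k3s/k3s.yaml {{kubeconfig_dir}}/config' | render_template /root/.kube
```

With the assumed value, the example prints `ln -sf /etc/rancher/k3s/k3s.yaml /root/.kube/config`, which is the command the server would actually run.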

Now that the variable is set up, proceed with running the scripts with CloudRay.

Running the Script with CloudRay

You can choose to run the scripts individually or execute them all at once using CloudRay’s Script Playlists. If you prefer to run them individually, follow these steps:

Run the Install cluster script

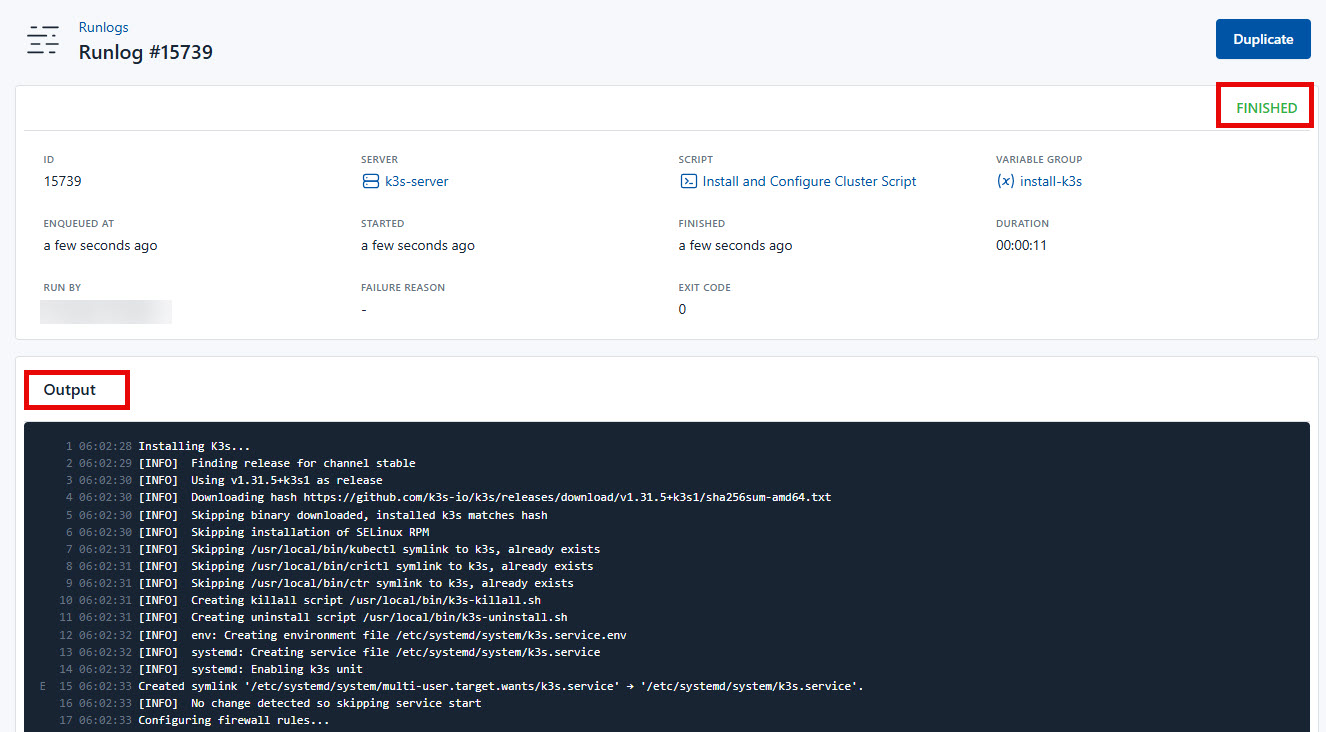

CloudRay uses Runlogs to execute scripts on your servers while providing real-time logs of the execution process.

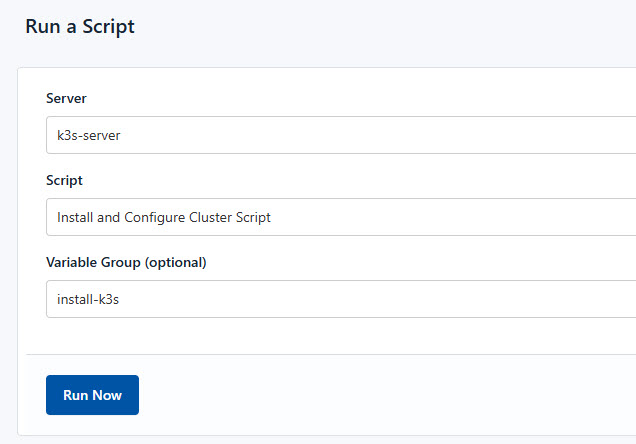

To run the Install and Configure Cluster Script, follow these steps:

- Navigate to Runlogs: In your CloudRay project, go to the Runlogs section in the top menu.

- Create a New Runlog: Click on New Runlog.

- Configure the Runlog: Fill in the required details:

  - Server: Select the server you added earlier.

  - Script: Choose the “Install and Configure Cluster Script”.

  - Variable Group (optional): Select the variable group you created earlier.

- Execute the Script: Click on Run Now to start the execution.

CloudRay will automatically connect to your server, run the Install and Configure Cluster Script, and provide live logs to track the process. If any errors occur, you can review the logs to troubleshoot the issue.

Run the Install and Deploy NVIDIA script

If you plan to run GPU-accelerated workloads, run the Install Helm and Deploy NVIDIA Script next by following similar steps:

- Navigate to Runlogs: In your CloudRay project, go to the Runlogs section in the top menu.

- Create a New Runlog: Click on New Runlog.

- Configure the Runlog: Provide the necessary details:

  - Server: Select the same server used earlier.

  - Script: Choose the “Install Helm and Deploy NVIDIA Script”.

  - Variable Group: Select the variable group you created earlier.

- Execute the Script: Click on Run Now to deploy the GPU Operator.

Your lightweight Kubernetes cluster with K3s is now deployed and ready for GPU-accelerated workloads with the NVIDIA GPU Operator!

Troubleshooting

If you encounter issues during deployment, consider the following:

- K3s Service Not Running: Check the service status with

sudo systemctl status k3s

and restart it with

sudo systemctl restart k3s

- Firewall Issues: Verify that the required ports (6443, 30000-32767) are open using

sudo ufw status

- NVIDIA GPU Operator Deployment Issues: Check logs with

kubectl logs -n gpu-operator -l app=nvidia-gpu-operator
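The firewall check above can also be scripted so it gives a clear pass or fail. The following sketch parses the plain output of `ufw status` and looks for an ALLOW entry for a given rule. The function name `port_allowed` is hypothetical, and the parsing assumes the default column layout (To, Action, From) that `ufw status` prints.

```bash
#!/bin/bash
# port_allowed RULE
# Reads `ufw status` output on stdin and succeeds if RULE (for example
# 6443/tcp or 30000:32767/udp) has an ALLOW entry. Hypothetical helper.
port_allowed() {
  awk -v rule="$1" '$1 == rule && $2 == "ALLOW" { found = 1 } END { exit !found }'
}

# Example use on the server:
#   sudo ufw status | port_allowed 6443/tcp || echo "6443/tcp is not open" >&2
#   sudo ufw status | port_allowed 30000:32767/tcp || echo "NodePort range (tcp) is not open" >&2
```

The rule string must match the form used when the rule was added, so the NodePort range is checked as `30000:32767/tcp` rather than as individual ports.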

If you still experience issues, refer to the official K3s Guide and NVIDIA GPU Operator Documentation for more details.

Related Guide

Olusegun Durojaye

CloudRay Engineering Team